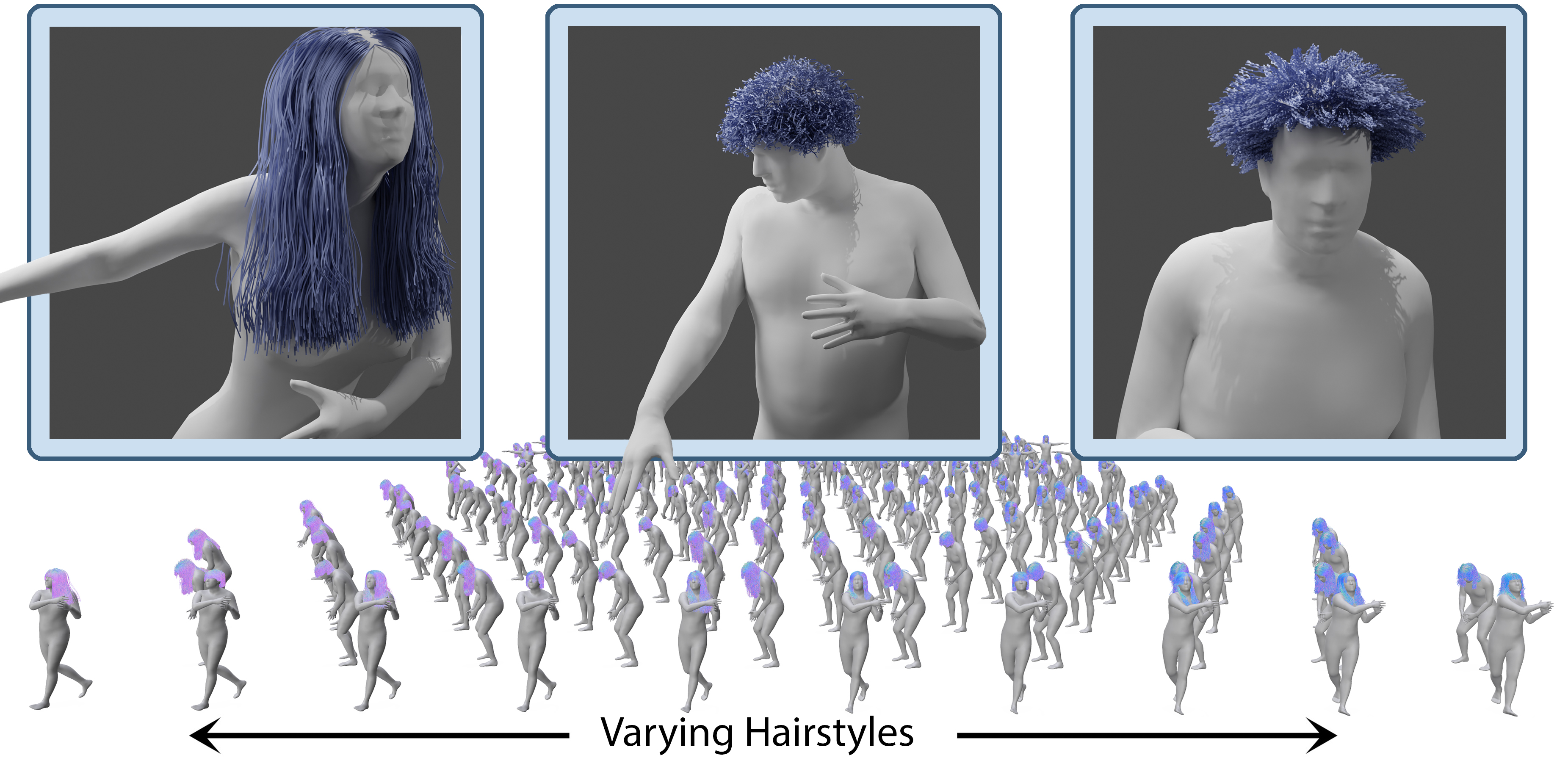

We present Quaffure, a real-time quasi-static neural hair simulator that produces naturally draped hair in only a few milliseconds on commodity hardware, taking the hairstyle, body shape, and pose into account. Our method scales to predicting the drape of 1000 hair grooms in just 0.3 seconds. Quaffure is trained using a physics-based self-supervised loss, eliminating the need for simulated training data, which is costly and cumbersome to obtain. We show that our method works for a wide variety of body shapes and poses, with hairstyles ranging from straight to curly and from short to long.

Abstract

Realistic hair motion is crucial for high-quality avatars, but it is often limited by the computational resources available for real-time applications. To address this challenge, we propose a novel neural approach to predict physically plausible hair deformations that generalizes to various body poses, shapes, and hairstyles. Our model is trained using a self-supervised loss, eliminating the need for expensive data generation and storage. We demonstrate our method's effectiveness through numerous results across a wide range of pose and shape variations, showcasing its robust generalization capabilities and temporally smooth results. With an inference time of only a few milliseconds on consumer hardware and the ability to scale to predicting the drape of 1000 grooms in 0.3 seconds, our approach is well suited to real-time applications.

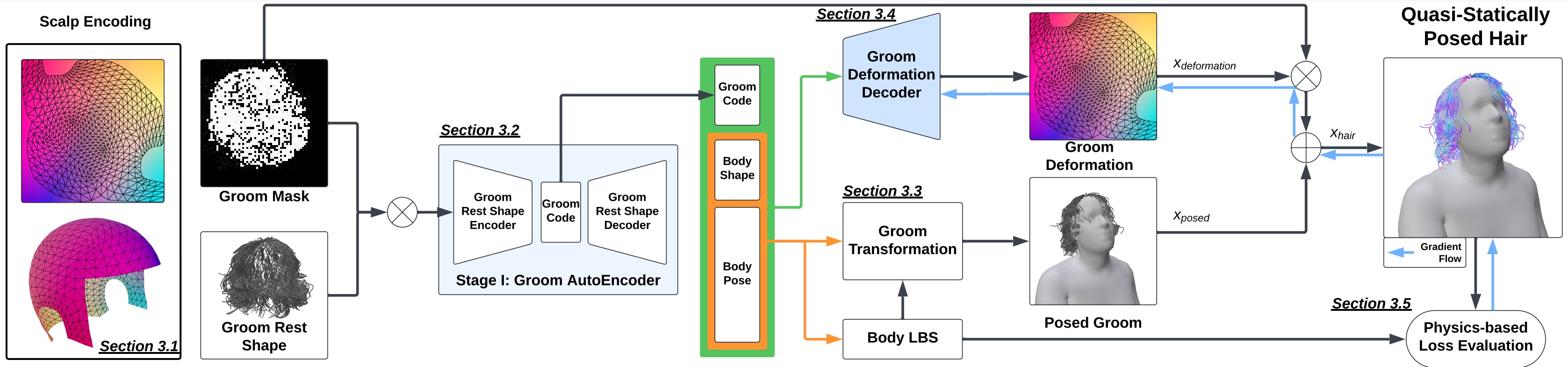

Method Overview

Our method takes as input a code consisting of a latent code for the rest hair shape, the body shape parameters, and the full skeleton pose. The output is naturally draped hair, computed as the sum of two terms: the hair posed according to the body pose and shape parameters, and learned corrections produced by the groom deformation decoder. We train our method in two stages: i) an autoencoder is trained on all hairstyles to obtain a groom latent code, and ii) the groom deformation decoder is trained in a physics-based self-supervised fashion. The hair strands are encoded in a 2D texture representation (left), where each strand is stored in the pixel in which its root particle is located. The figure shows how the 3D scalp geometry (bottom) is mapped to a high-dimensional 2D texture map (top).
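The data flow described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the UV-to-pixel rounding, the array layouts, and all dimensions used in the example (16 latent values, 10 shape parameters, 72 pose values, a 64×64 texture) are assumptions made for illustration only. The sketch shows three things: storing each strand in the pixel of its root particle, assembling the input code, and forming the draped hair as posed hair plus corrections.

```python
import numpy as np

def pack_strands_to_texture(strands, root_uv, resolution):
    """Store each strand in the texture pixel where its root lies.

    strands: (S, P, 3) array of S strands with P particles each.
    root_uv: (S, 2) scalp UV coordinates of the root particles, in [0, 1).
    Returns a (resolution, resolution, P * 3) texture and a boolean
    occupancy mask. Assumption: if two roots fall in the same pixel,
    the later strand overwrites the earlier one.
    """
    num_strands, num_points, _ = strands.shape
    texture = np.zeros((resolution, resolution, num_points * 3), dtype=strands.dtype)
    mask = np.zeros((resolution, resolution), dtype=bool)
    cols = np.clip((root_uv[:, 0] * resolution).astype(int), 0, resolution - 1)
    rows = np.clip((root_uv[:, 1] * resolution).astype(int), 0, resolution - 1)
    # Each pixel holds the flattened particle positions of one strand.
    texture[rows, cols] = strands.reshape(num_strands, -1)
    mask[rows, cols] = True
    return texture, mask

def build_input_code(groom_latent, body_shape, skeleton_pose):
    """Concatenate the three inputs into one code vector (assumed layout)."""
    return np.concatenate([groom_latent, body_shape, skeleton_pose])

def drape(posed_hair, corrections):
    """Draped hair = posed hair + learned corrections."""
    return posed_hair + corrections

# Example with hypothetical sizes and random data.
rng = np.random.default_rng(0)
example_strands = rng.normal(size=(100, 32, 3)).astype(np.float32)
example_uv = rng.uniform(size=(100, 2))
example_texture, example_mask = pack_strands_to_texture(example_strands, example_uv, 64)
example_code = build_input_code(np.zeros(16), np.zeros(10), np.zeros(72))
# Stand-in for the decoder output: zero corrections leave the posed hair unchanged.
example_draped = drape(example_texture, np.zeros_like(example_texture))
```

In the actual method, the corrections would come from the groom deformation decoder evaluated on the input code; the zero array here is only a placeholder so the sketch runs on its own.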

Results

We showcase the versatility of our method in modeling the quasi-static behavior of a wide range of hairstyles and lengths for different body shapes and poses. All results are obtained using the same settings, without any manual parameter tuning. Our results cover short, medium, and long hair with various levels of curliness, including straight, wavy, curly, and kinky.